'Domain Clustering' is a Google update that was never officially announced, but one which has the potential to impact the search marketing landscape.

In this post, Lee Allen, Technical Planning Director, and Matthew Barnes, SEO Executive at Stickyeyes, provide an in-depth analysis of this under-the-radar update.

Introduction

In May 2013, Matt Cutts officially announced that a new version of Google's Penguin algorithm was set to roll out.

Google wasted no time with the rollout: around a week after Matt Cutts' announcement, formal confirmation came that Penguin 2.0 was officially out in the wild.

With Penguin, Google is making a fairly ambitious attempt to cut down on 'web spam', such as unnatural links. At around the same time, an under-the-radar update referred to as 'Domain Clustering' also appeared.

This update was never officially announced, although Matt Cutts did make a webmaster video entitled 'Why does Google show multiple results from the same domain?'.

In the video, Matt talks about users being unhappy at seeing too many results from a single domain, stating "Once you've seen a cluster of four results from a given domain, as you click on subsequent pages we won't show you results again".

This seemed to be enough of an issue to kick the search quality team into action and roll out the next generation of the domain diversity algorithm.
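Matt's description can be read as a simple per-domain cap. The following is a toy illustration of that rule as quoted, not Google's implementation. The cap of four comes from the quote. The function names and the way results are grouped by domain (hostname with any leading "www." removed) are our own assumptions.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Approximate a result's domain: hostname (lower-cased by urlparse) minus a leading 'www.'.

    Assumption: a real tool would group by registrable domain (Public Suffix List);
    this simple version treats each remaining hostname as its own domain.
    """
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def cap_results_per_domain(ranked_urls: list[str], max_per_domain: int = 4) -> list[str]:
    """Toy version of the quoted rule: once a domain has shown `max_per_domain`
    results, its later (lower-ranked) results are dropped. Not Google's algorithm."""
    shown = Counter()
    kept = []
    for url in ranked_urls:
        domain = domain_of(url)
        if shown[domain] < max_per_domain:
            kept.append(url)
            shown[domain] += 1
    return kept
```

Because the cap applies as the ranked list is walked, a domain's fifth and later results disappear wherever they would have appeared, including on subsequent pages.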

Although Google said the new algorithm change would roll out relatively soon, there was no formal announcement confirming whether the domain clustering change actually happened. We therefore decided to dig into the data to investigate whether this was a hidden release.

Our Analysis

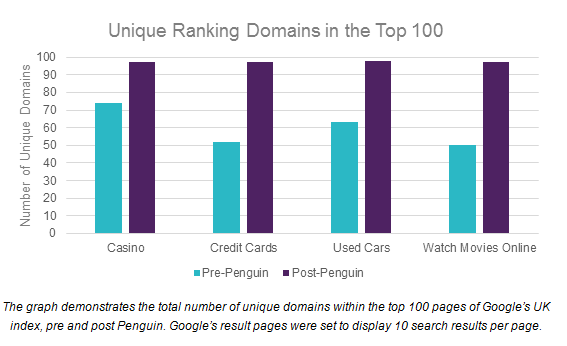

We extracted the daily top 100 rankings in Google UK for 489 major keywords across multiple industries, which gave us a foundation for deeper analysis. First, we selected a few highly searched generic keywords to see if there were any identifiable changes.

Our initial findings were quite surprising. Nobody would have expected Google to make such a drastic change with this update: for each of these queries, it now returned nearly 100 (97 in most cases) completely unique domains in the top 100. Almost an entirely unique index!

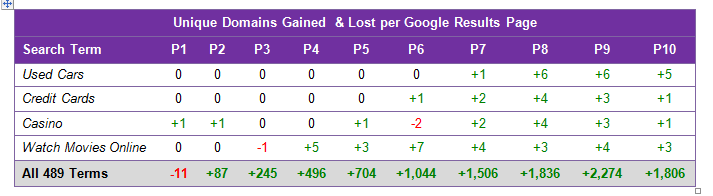

Since these few keywords already showed a clear pattern, we checked how it appeared across our entire keyword set. The accompanying chart shows the average number of unique domains on each of the first 10 pages of Google's results.

These results confirm that the change was not limited to a handful of keywords. The pre-Penguin figures show that results were previously not spread across many domains, so the average was relatively low.

We're now looking at a completely transformed set of results, with the average increasing as the visitor navigates to deeper pages. The change on deeper pages is particularly striking. On page 9, Google previously served an average of 3.8 unique domains and now serves 10, meaning every result on the page comes from a different domain.
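The per-page averages described here can be reproduced from a keyword-to-rankings dataset along these lines. This is a sketch under stated assumptions. We assume each keyword maps to its ranked list of top-100 result URLs, and that "unique domains" means the distinct domains on a given page of ten results. The helper names and the domain grouping (hostname without a leading "www.") are hypothetical.

```python
from statistics import mean
from urllib.parse import urlparse

RESULTS_PER_PAGE = 10  # assumption: ten organic results per page


def domain_of(url: str) -> str:
    """Hostname (lower-cased by urlparse) without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def unique_domains_per_page(ranked_urls: list[str], pages: int = 10) -> list[int]:
    """Count distinct domains on each page of one keyword's top-100 list."""
    counts = []
    for p in range(pages):
        page = ranked_urls[p * RESULTS_PER_PAGE:(p + 1) * RESULTS_PER_PAGE]
        counts.append(len({domain_of(u) for u in page}))
    return counts


def average_unique_domains_by_page(serps: dict[str, list[str]], pages: int = 10) -> list[float]:
    """Average the per-page counts across all keywords (keyword -> ranked URLs)."""
    per_keyword = [unique_domains_per_page(urls, pages) for urls in serps.values()]
    # zip(*...) regroups the lists so each tuple holds one page's counts for every keyword.
    return [mean(page_counts) for page_counts in zip(*per_keyword)]
```

Running this on the pre- and post-update snapshots gives two ten-element lists that can be plotted side by side.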

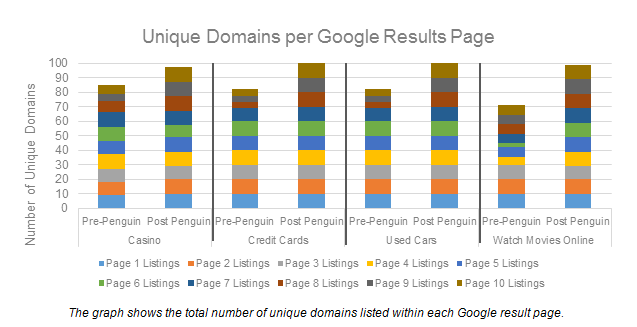

We also looked at how this changed on a page-by-page basis for each of our initial sample terms. The main insight is that there was little, if any, change within Google's first few pages, with the page-by-page change becoming more aggressive the deeper you delve into the index.

The evidence is pretty conclusive that the update has been rolled out, although it is not known why there was no formal announcement.

That said, this analysis confirms that Google no longer wants to show too many results from the same domain and is instead moving towards a more diverse set of results for all queries.

Key findings and facts so far

- On average, there are now 34.7 unique domains per 100 results, compared with 19.3 before the update. As this is well short of the near-100 seen for our sample keywords, a number of terms appear not to have been fully affected yet.

- 1,323 sites lost all of their results. Only nine of these started with 10 or more results, and 121 with three or more; for these sites the loss may have been a combined blow from this update and Penguin 2.0.

- 451 sites lost more than 50% of their results.

- 52% of the current index is occupied by new domains: 8,892 domains that previously didn't rank are now appearing.
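Figures like these can be derived by comparing per-domain result counts before and after the update. The following is a minimal sketch, assuming each snapshot maps keyword to ranked URLs. It also assumes the "lost more than 50%" group excludes sites that lost everything, since 451 is smaller than 1,323. A further assumption is that the "52%" figure is a share of result slots rather than of domains. All function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Hostname (lower-cased by urlparse) without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def domain_counts(serps: dict[str, list[str]]) -> Counter:
    """Total number of results each domain holds across all keywords."""
    counts = Counter()
    for urls in serps.values():
        counts.update(domain_of(u) for u in urls)
    return counts


def avg_unique_domains(serps: dict[str, list[str]]) -> float:
    """Average number of distinct domains per keyword's result list."""
    return sum(len({domain_of(u) for u in urls}) for urls in serps.values()) / len(serps)


def summarise_change(before: dict[str, list[str]], after: dict[str, list[str]]) -> dict:
    b, a = domain_counts(before), domain_counts(after)
    # Counter returns 0 for missing keys, so domains absent after the update count as 0.
    lost_all = sorted(d for d in b if a[d] == 0)
    # Still ranking, but with under half their previous number of results.
    lost_over_half = sorted(d for d in b if 0 < a[d] < 0.5 * b[d])
    new_domains = sorted(d for d in a if d not in b)
    # Share of post-update result slots held by domains that didn't rank before.
    new_share = sum(a[d] for d in new_domains) / sum(a.values())
    return {
        "avg_unique_before": avg_unique_domains(before),
        "avg_unique_after": avg_unique_domains(after),
        "lost_all": lost_all,
        "lost_over_half": lost_over_half,
        "new_domains": new_domains,
        "new_share": new_share,
    }
```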

As this update trimmed a significant number of domains from the results, the obvious question is: who was affected?

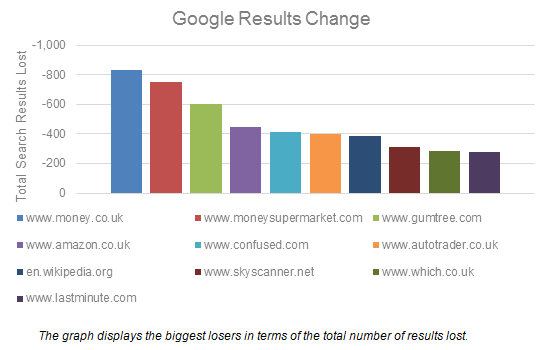

So, who are the potential losers?

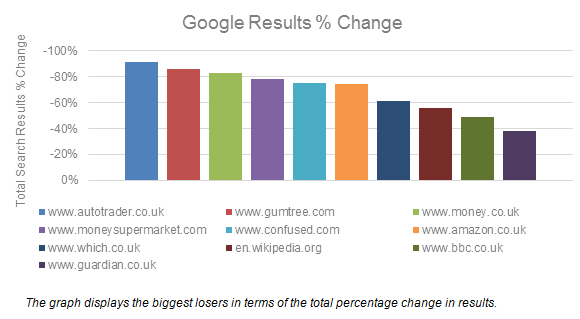

The graph below shows the domains with the biggest change, essentially who lost the most results across the analysed keywords.

In absolute terms, the biggest loser is Money.co.uk, which lost 830 results. Surprisingly, all of the listed domains are what you'd class as 'big brands', and they have mainly lost ground within the deeper pages of the index.

For instance, for the term 'best travel insurance', Money.co.uk began with 33 results in the top 100. After the update, it had been reduced to just one!

Although some sites suffered a large drop in absolute terms, others suffered a much larger percentage drop. This is mainly because some sites held far more results than others to begin with.

By this measure, Auto Trader is the biggest loser, with a 91% drop in results. We see a similar pattern for the term 'used cars for sale', where Auto Trader had dominated the SERP with 65 of the top 100 results.

The new algorithm soon replaced their results with ones from other domains, reducing Auto Trader to a couple of results.
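Ranking by absolute loss and by percentage loss can produce different "biggest losers". The following sketch shows both orderings from per-domain result counts. It assumes each input maps domain to the number of results held across the keyword set, and the function name is hypothetical.

```python
def rank_losers(before: dict[str, int], after: dict[str, int], top: int = 5):
    """Rank domains by absolute and by percentage loss of results.

    before/after map domain -> number of results held across the keyword set.
    Returns two lists of (domain, results_lost, percent_lost) tuples.
    """
    rows = []
    for domain, start in before.items():
        if start == 0:
            continue  # percentage change is undefined without a starting count
        end = after.get(domain, 0)
        lost = start - end
        rows.append((domain, lost, 100 * lost / start))
    by_absolute = sorted(rows, key=lambda r: r[1], reverse=True)[:top]
    by_percentage = sorted(rows, key=lambda r: r[2], reverse=True)[:top]
    return by_absolute, by_percentage
```

For a single keyword, the 'best travel insurance' example works out as 33 - 1 = 32 results lost, a drop of about 97%.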

When did this happen?

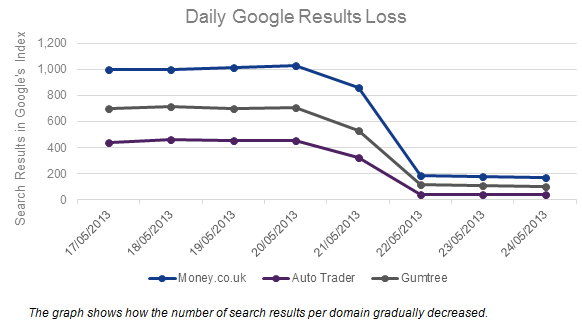

Taking a few of the biggest losers as examples, we've plotted their daily result losses over this period.

Based on this data, we're fairly certain that this update had finished rolling out by 23 May 2013. The changes in results between the 20th and the 23rd show a phased shift to the new index, over the same date range in which Penguin went live.

Should Webmasters be worried?

For the majority of sites, the change looks worse than the reality. As detailed previously, the update typically left the first two pages unaffected, focusing on cleaning up results on the lower pages of the index.

Because results beyond page two generally receive an extremely low share of clicks, we expected that most of these sites wouldn't notice the update at all, but we wanted to verify that.

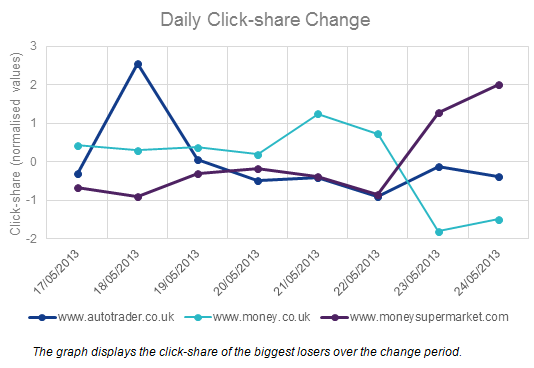

The visibility graph shows each site's visibility score over the same period for the same keyword set. Because the sites' click-share volumes vary widely, the scores have been normalised for comparison.
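The post doesn't say which normalisation was used. As one common option, min-max scaling maps each site's daily scores onto a 0 to 1 range, so trends can be compared regardless of absolute click-share. This sketch assumes that method, and its function names are hypothetical.

```python
def min_max_normalise(series: list[float]) -> list[float]:
    """Rescale one site's daily visibility scores to the 0-1 range (assumed method)."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]  # flat series: no range to scale
    return [(v - lo) / (hi - lo) for v in series]


def normalise_all(scores_by_site: dict[str, list[float]]) -> dict[str, list[float]]:
    """Apply the same scaling independently to every site's series."""
    return {site: min_max_normalise(vals) for site, vals in scores_by_site.items()}
```

Each series is scaled against its own minimum and maximum. This preserves the shape of each site's trend but not the relative size of the sites.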

Strangely, the impact on click-share has been mixed for three of the biggest losers. Auto Trader suffered a loss before the update, which could be put down to normal ranking fluctuation. Once the dust had settled, however, its click-share was back at similar levels, despite it being the biggest percentage loser.

Money.co.uk, on the other hand, took a significant click-share hit. MoneySupermarket turned out to be quite the opposite of a loser, even after dropping a large number of results.

It is likely that neither site's click-share change was a result of the Domain Clustering update. Rather, Penguin probably affected one positively and the other negatively.

Quantity over quality?

Given our findings, it is clear that the domain clustering algorithm is now in action and working to reduce the number of times a visitor sees results from a single domain.

But, with authoritative brands retaining their dominant positions on the top pages, does seeing a more diverse set of results through the lower layers of the index really benefit search quality?

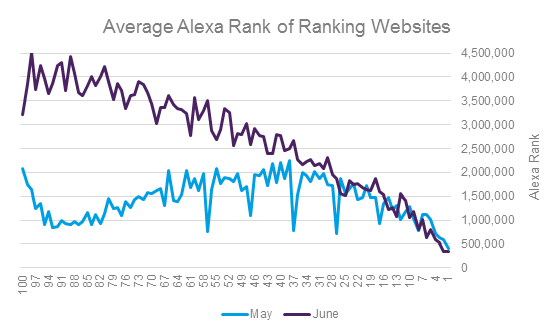

We decided to analyse the shift in site quality since the domain clustering update. As we're trying to measure the type of site that now ranks, we looked specifically at Alexa Rank, a publicly available ranking of websites by traffic.

This indicates whether Google has replaced the previously high-traffic sites with other 'big brands' or with lower-quality sites. Lower-quality sites typically have a higher Alexa Rank; because rank 1 is the most-visited site, a higher number means less traffic.

This graph plots the average Alexa Rank at each ranking position (for May and June) of the sites that ranked in the top 100 positions across 621 keywords.

Again, the data is conclusive. In May, the sites occupying most of the top 100 positions (typically those beyond position 20, i.e. past page two) had a significantly lower Alexa Rank, and therefore more traffic, than those ranking now.

On average, sites ranking in May had an Alexa Rank of 1,437,153, compared with 2,681,099 in June. That is almost double, about 1.87 times higher. This signals that Google is favouring lower-traffic, and likely lower-quality, websites over established domains in the lower pages of the index.
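The per-position and overall averages can be computed with a short script like the following one. This is a sketch under assumptions. Each keyword is assumed to map to its ranked list of domains, and Alexa Ranks are assumed to sit in a simple domain-to-rank lookup. Domains without a rank are skipped, and the function names are hypothetical.

```python
from statistics import mean
from typing import Optional


def avg_alexa_rank_by_position(serps: dict[str, list[str]], alexa_rank: dict[str, int],
                               positions: int = 100) -> list[Optional[float]]:
    """Average Alexa Rank of the sites at each ranking position.

    serps maps keyword -> ranked list of domains; alexa_rank maps domain -> rank.
    Lower Alexa Rank = more traffic (rank 1 is the most-visited site).
    """
    averages = []
    for pos in range(positions):
        ranks = [alexa_rank[domains[pos]]
                 for domains in serps.values()
                 if pos < len(domains) and domains[pos] in alexa_rank]
        averages.append(mean(ranks) if ranks else None)  # None: no data at this position
    return averages


def overall_avg_alexa_rank(serps: dict[str, list[str]], alexa_rank: dict[str, int]) -> float:
    """Average Alexa Rank over every ranked result that has a known rank."""
    ranks = [alexa_rank[d] for domains in serps.values() for d in domains if d in alexa_rank]
    return mean(ranks)
```

Running both functions on the May and June snapshots gives the two curves and the two overall averages to compare.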

Our view is that the update has removed results simply because they come from the same website, without any real thought or testing on Google's part, sacrificing quality for greater diversity!

I for one would prefer to see more results from highly authoritative, trusted brands.
