If you want to know how to make your e-commerce website better, ask the people who use it.

Surveys are one of the quickest ways of finding out what matters to your customers, what's missing or broken and what's getting in the way of conversions. They're also a great source of market intelligence.

Add a survey to your site, treat the comments from your customers as if they contained nuggets of gold, and you'll learn things about your business and your market which no 'best practice' guide could tell you.

Why you need voice of customer surveys

If I had to choose just one optimisation tool for an e-commerce site, I would install a voice of customer survey even before I implemented Google Analytics, commissioned any usability tests, or ran any split tests.

Of course, in real life we don't have to make that choice and can do all of those. But many sites seem to treat surveys as an occasional afterthought and not a top priority. I think that's a mistake.

It pays to start with a survey straight away for two key reasons:

- With surveys (like usability tests) you start getting useful insights immediately. You don't have to wait for significant results to build up, as you do with web analytics reporting and split tests.

- With surveys your visitors will often point you precisely at what is wrong and tell you what they were thinking and what they were trying to do when it happened. That's way better than puzzling over funnel abandonment rates.

As a bonus, you also gain great insights into the needs of your visitors and the language they use to express them.

The more comments you read, the better your understanding of your market will be. This can drive everything from advertising and SEO, through site navigation, to calls to action, and all the way on to product development and merchandising.

This 'listen to your customer' aspect of surveys is hugely important. I started my working life standing behind a shop counter before moving on to the call centre. I'm old-school enough to believe everyone involved in retail should spend time with customers if they can. In my last in-house job I had my desk in the same room as the contact centre for exactly that reason, even though I was running the whole direct sales part of the company.

Where to place the survey

Although there are great systems for running feedback surveys on every page of your site, I'm not in favour of any form of pop-up which might distract your visitors from whatever they want to do.

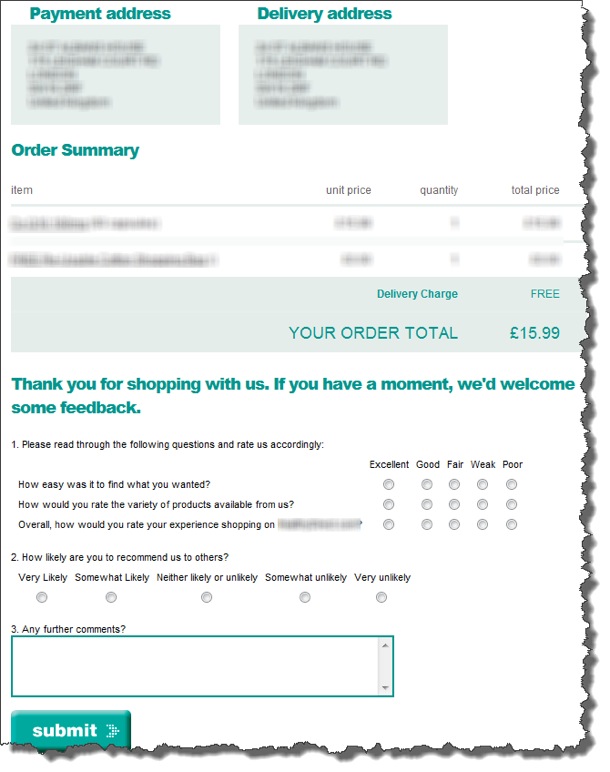

Instead, my favourite type of e-commerce survey is one embedded in the order confirmation page. I like these because there is zero risk of distracting someone from placing an order, since the survey is only offered once the sale is complete.

The obvious objection is that this means you don't get any survey entries from people who did not intend to buy or were unable to buy. That's a common-sense point. But in reality it doesn't seem to be a problem.

I base that claim on many years of working with such comments from clients' sites. During peak periods we process thousands of free-text comments each week.

In real life it turns out that people who have problems buying can be remarkably tenacious. Some will eventually find what they want, or make it through a tricky checkout, and then let you know all about the problems when they get to the survey comments form.

The same rule applies as with usability tests. You can learn a great deal from a very small number of comments.

If just one person provides a clue which allows you to identify and fix an obscure bug, it does not matter that hundreds of others only made vague references to difficulties doing something. You do not have to wait for a statistically significant number of people to be equally precise. One comment can be enough to tell you what to fix.

That kind of thing happens regularly, in my experience. After a few years the 'eureka' moments happen less often. But they still turn up often enough for the process to be well worth it.

Having said that, my advice is to combine survey comment analysis with other sources of information, such as Google Analytics, so you can decide which problems to fix first.

How to use Google Analytics to prioritise what to fix

Starting from the survey comment about some issue, you should dig down into GA reports and do a bit of forensic work. Using the comment for guidance you can find things which you would never have spotted unless someone had told you where to look.

- Identify the page where the problem occurs in GA.

- Work out what most people do if they don't experience the problem.

- See if the clue has given you enough information to be able to spot the page people end up on after the error. There's often a pattern once you know what to look for. But maybe they just exit.

- Now use GA to work out how many people are affected (Custom Advanced Segments based on the first two steps above are great for this).

- Compare any relevant conversion rates or micro-conversion rates for people affected by the problem with people not affected.
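Once you have the session and conversion counts for the affected and unaffected segments, the last two steps come down to simple arithmetic. The Python sketch below assumes you have already copied those counts out of GA by hand or via an export; the `Segment` class, the `compare_segments` function and the figures are hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Session and conversion counts for one segment (hypothetical export)."""
    name: str
    sessions: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        # Guard against empty segments rather than dividing by zero.
        return self.conversions / self.sessions if self.sessions else 0.0


def compare_segments(affected: Segment, unaffected: Segment) -> dict:
    """Share of sessions hit by the problem, and the conversion-rate gap."""
    total = affected.sessions + unaffected.sessions
    return {
        "share_affected": affected.sessions / total if total else 0.0,
        "affected_rate": affected.conversion_rate,
        "unaffected_rate": unaffected.conversion_rate,
        "rate_gap": unaffected.conversion_rate - affected.conversion_rate,
    }


# Made-up counts for an imaginary postcode error in the checkout.
affected = Segment("saw postcode error", sessions=400, conversions=20)
unaffected = Segment("no postcode error", sessions=9600, conversions=960)
print(compare_segments(affected, unaffected))
```

With these invented numbers, 4% of sessions hit the problem and those sessions convert at half the rate of everyone else. The arithmetic only sizes the gap; the comments are what tell you why it exists.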

Assessing the percentage of people affected is important. If you don't do this, it's easy to get the wrong impression from a steady flow of very hostile comments about a particular issue. You start to believe that the problem is more significant than it is. I've been caught out this way.

When you see a lot of negative comments it's easy to over-react and become convinced that the site is worse than it is.
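One rough way to keep a loud complaint in proportion is to turn the number of affected sessions and the conversion gap into an estimate of lost orders, then rank issues by that estimate. This Python sketch assumes affected and unaffected visitors would otherwise behave alike, which is rarely quite true, so treat the result as a guide for prioritising rather than a measurement. The function name, issue names and numbers are invented.

```python
def estimated_lost_orders(affected_sessions: int,
                          affected_rate: float,
                          unaffected_rate: float) -> float:
    """Rough count of orders lost, assuming affected visitors would otherwise
    have converted at the unaffected rate (an assumption, not a measurement)."""
    gap = max(unaffected_rate - affected_rate, 0.0)  # ignore negative "losses"
    return affected_sessions * gap


# Invented issues: (name, affected sessions, affected rate, unaffected rate)
issues = [
    ("postcode lookup fails", 400, 0.05, 0.10),
    ("voucher box is confusing", 3000, 0.095, 0.10),
]

# Biggest estimated loss first.
ranked = sorted(
    ((name, estimated_lost_orders(n, a, u)) for name, n, a, u in issues),
    key=lambda item: item[1],
    reverse=True,
)
for name, lost in ranked:
    print(f"{name}: about {lost:.0f} orders")
```

In this made-up example the problem affecting only 400 sessions outranks the one affecting 3,000, because the gap it causes is so much larger.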

This is also a good moment to point out that you don't only get complaints. It's common for over 50% of the comments to be positive. People are remarkably nice and generous with their praise if all goes well, and many are supportive even when things have disappointed them.

Positive comments are also very valuable

One great use for positive comments is to circulate them within the team so that everyone knows that their work is appreciated. The more self-service shopping becomes, the fewer people working in companies get the benefit of interacting with customers.

A more commercial use is to mine these positive comments to work out what your customers see as your key strengths and to note the language they use to describe them. What you may think of as your Unique Selling Point may not resonate with your market.

Find out why your customers choose you over your rivals and use that language in your promotional activity.
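A crude way to start mining that language is to count the most common two-word phrases in your positive comments. This Python sketch assumes you have already picked out the positive comments (for example, those from people who gave a high recommendation score); the `frequent_phrases` function, the stop-word list and the sample comments are made up, and the counts are only a pointer to which comments are worth reading closely.

```python
import re
from collections import Counter

# A deliberately tiny, hypothetical stop-word list; a real one would be longer.
STOP_WORDS = {"the", "a", "and", "to", "of", "i", "it", "was", "is", "for",
              "my", "very", "you", "your", "with", "in", "on", "so"}


def frequent_phrases(comments, n=2, top=10):
    """Count the most common n-word phrases across a list of comments.

    Dropping stop words can join words that were not adjacent in the
    original text, so treat odd-looking phrases with suspicion.
    """
    counts = Counter()
    for comment in comments:
        words = [w for w in re.findall(r"[a-z']+", comment.lower())
                 if w not in STOP_WORDS]
        counts.update(" ".join(words[i:i + n])
                      for i in range(len(words) - n + 1))
    return counts.most_common(top)


positive = [
    "Fast delivery and great prices, as always.",
    "Great prices and really fast delivery!",
    "Easy to find what I wanted. Fast delivery too.",
]
print(frequent_phrases(positive, top=3))
```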

Product requests form a special group of comments. It's common for customers to suggest things which they would like you to stock, though how often this happens varies by market. In one fast-moving market I've seen a regular flow of around 10% of comments being requests for new products. That's enough to justify a specific export for the product development team each week.

10% is exceptional, I think. But there's a steady trickle of requests for more products in some ranges, or for discontinued items to be restored, across all the sites I've worked on.

This is the kind of market intelligence you would get routinely in stores, and to some extent from telephone orders, but which is hard to get from e-commerce sites. Formal market research projects are very expensive, so it makes sense to tap into this relatively cheap and continuous source.
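A first pass at a weekly product-request export can be as simple as flagging comments that match a few request-style phrases. In this Python sketch the patterns, function names and sample comments are hypothetical; you would tune the patterns to the way your own customers write, and someone still needs to check what gets flagged and what gets missed.

```python
import re

# Hypothetical trigger phrases; adjust them to your customers' wording.
REQUEST_PATTERNS = [
    r"\bplease (?:stock|sell|bring back)\b",
    r"\bwould (?:like|love) (?:you )?to (?:see|stock|sell)\b",
    r"\bdo you (?:stock|sell)\b",
    r"\bbring back\b",
    r"\bdiscontinued\b",
]
REQUEST_RE = re.compile("|".join(REQUEST_PATTERNS), re.IGNORECASE)


def product_requests(comments):
    """Return the comments that look like requests for products."""
    return [c for c in comments if REQUEST_RE.search(c)]


def request_share(comments):
    """Fraction of comments flagged as product requests."""
    return len(product_requests(comments)) / len(comments) if comments else 0.0


week = [
    "Great service, thanks.",
    "Please stock the larger size of the blue mugs.",
    "Checkout was a bit slow.",
    "Shame the green teapot was discontinued, any chance it will return?",
]
for comment in product_requests(week):
    print(comment)
print(f"{request_share(week):.0%} of comments flagged")
```

The flagged list could then be saved to a spreadsheet each week for the product development team.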

What to ask in the survey

It's important to keep the survey very short. The more questions you ask, the fewer replies you will get. It's a very clear trade-off.

Avoid complex options, multiple-choice questions (which take time to read and think about, often because none of the options is quite right) and dynamic questions. It should be clear at a glance that the survey is very short and won't take long.

The highest priority is a free text 'any comments' question.

- Do not complicate or skew this by using words like 'suggestions', 'ideas', 'complaints'.

- Do not offer several different text boxes for different types of comment and expect people to work out what to put where.

Keep it simple. You want the comment. Every bit of complication will reduce the number of comments and restrict what people tell you.

I also think it's good to ask the 'Net Promoter*' question: "How likely are you to recommend this site to a colleague or friend?" with a score from 0 to 10. Full details of how to calculate your Net Promoter Score from the results are explained on the Net Promoter site.
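The calculation itself is short: the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (0 to 6), with passives (7 or 8) counted only in the total. A minimal Python version with made-up scores might look like this; the function name is illustrative, and the Net Promoter site remains the authority on the details.

```python
def net_promoter_score(scores):
    """Percentage of promoters (9-10) minus percentage of detractors (0-6).

    Passives (7-8) count towards the total but neither group, so the
    result runs from -100 to +100.
    """
    if not scores:
        raise ValueError("need at least one score")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Made-up scores from ten survey responses.
scores = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(scores))  # 5 promoters, 3 detractors -> 20.0
```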

There are three benefits of asking this question.

- It provides a simple overall score which you can use as a guide to how you're doing. Some people argue that it is too simple, but I'm not suggesting you should use it as any more than a guide and I'm not suggesting it should be your only measure.

- Net Promoter Score provides a benchmark you can use to compare yourself to some other companies which make their scores public.

- Hidden Bonus: by asking people how likely they are to recommend your site, you are planting the idea of doing so. In this day and age you could go the whole way and put Facebook and Google+ buttons right next to the survey form.

Since we're talking about e-commerce sites and the survey is on the 'thank you page' I would also ask two other rating questions:

- "How easy was it to find what you wanted?"

- "How easy was it to use the checkout?"

That's it. Maybe one more question, if you must. Please resist the temptation to ask more questions at this point.
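If it helps to pin the survey down before building it, the whole thing fits in a few lines of configuration. This Python sketch is hypothetical: the keys and the `clean_response` helper are illustrative, the 0 to 10 range comes from the recommendation question itself, and the 1 to 5 range for the two ease questions is an assumption (any simple scale would do). Every answer is treated as optional, so nobody is forced through a question.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Question:
    key: str
    prompt: str
    low: Optional[int] = None   # None marks the free-text question
    high: Optional[int] = None


# Hypothetical survey definition: free text first, then three ratings.
SURVEY = (
    Question("comment", "Any comments?"),
    Question("recommend",
             "How likely are you to recommend this site to a colleague or friend?",
             0, 10),
    Question("find_ease", "How easy was it to find what you wanted?", 1, 5),  # assumed scale
    Question("checkout_ease", "How easy was it to use the checkout?", 1, 5),  # assumed scale
)


def clean_response(raw: dict) -> dict:
    """Keep only answers to known questions, dropping blanks and out-of-range scores."""
    clean = {}
    for q in SURVEY:
        value = raw.get(q.key)
        if q.low is None:
            if isinstance(value, str) and value.strip():
                clean[q.key] = value.strip()
        elif type(value) is int and q.low <= value <= q.high:
            clean[q.key] = value
    return clean


print(clean_response({"comment": "  Quick delivery  ", "recommend": 9, "find_ease": 7}))
```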

Coming next: how to get the best out of your survey

In part two of this series I'll give you an overview of how to get started. I'll cover some of the systems you can use and give you some tips about useful extra information to collect.

Most important of all, I'll explain why you need to set up a routine business procedure to analyse the comments and give an example of how doing so is vital to getting the best return.

[*Net Promoter, NPS, and Net Promoter Score are trademarks of Satmetrix Systems, Inc., Bain & Company, and Fred Reichheld]
