E-commerce voice of customer surveys are a great way of learning what your customers want and what's making it hard for them to buy from you.

There's a wealth of optimisation advice and market intelligence to be had, if you just give customers a chance to tell you what they think.

This post gives you a quick overview of how to get started with surveys. It covers the kind of systems you can use and explains some of the valuable details which will give you extra site optimisation information.

I'll also suggest how you can work with the information in a way which will give you maximum benefit.

This is the second part of a two-part series. In part one I explained:

- Why embedded customer surveys are so valuable.

- The questions to ask.

- When to ask them in a way which will never risk your conversion rate.

What to use for the survey form

The system you use does not need to be very sophisticated. The familiar low-cost survey systems, such as Surveymonkey, Surveygizmo and Zoomerang, are fine for producing surveys which you can embed in your confirmation page.

Another candidate is the general-purpose form builder Wufoo, which I've heard recommended for the flexibility of its form design.

There are more expensive and much more powerful systems from specialist suppliers like iPerceptions, Opinionlab, Foresee and Clicktools which are specifically made for this kind of work and offer all manner of extra benefits, but my recommendation is to start with something basic which is cheap and easy to set up.

It's the same story as with web analytics: start with something which is simple to implement and inexpensive. Only move up to one of the expensive solutions once you've established that you're getting benefits and are also pushing the limits of what you can do with your first system.

For reference, there's the Econsultancy Online Surveys and Research Buyer's Guide.

Important: survey comments are a two-way channel which can build repeat sales

One other point about implementation: you will often find that people leave comments which deserve a reply. People may even ask questions. Since these are customers who have just completed an order it is understandable that they feel that they are addressing the company directly.

For that reason I think it is wise to make sure that the order reference number and, if possible, the email address are automatically stored as part of the survey data so that you can use them to send a reply.

Another valuable benefit of storing the email address is that you can use it to send out more generic emails thanking people for their suggestions.

It's a great idea to let people know when improvements to the site have been made as a result of customer ideas, even if the particular issue an individual customer has raised has not yet been solved.

Here's an example of such an email, from the National Trust in the UK:

Don't forget to store some technical information

Bonus implementation tip: you should always store the browser version and operating system if you can. When you're trying to solve obscure problems it is often extremely valuable to group the reports of bugs by browser version.

This can be a great way of identifying browser-specific issues. I've seen this happen again and again...
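As a rough illustration of that grouping, here is a minimal Python sketch. The sample rows, the 'crash' tag and the `count_by_browser` helper are all made up for the example; your survey export will have its own field names.

```python
from collections import Counter

# Hypothetical survey rows: (browser and version, operating system, tags).
responses = [
    ("IE 7", "Windows XP", {"crash", "cart"}),
    ("IE 7", "Windows XP", {"crash"}),
    ("Firefox 3.6", "Windows 7", {"price"}),
    ("IE 7", "Windows Vista", {"crash", "payment"}),
    ("Safari 5", "Mac OS X", {"crash"}),
]


def count_by_browser(rows, tag):
    """Count the responses carrying `tag`, grouped by browser version."""
    counts = Counter()
    for browser, _operating_system, tags in rows:
        if tag in tags:
            counts[browser] += 1
    return counts


crash_counts = count_by_browser(responses, "crash")
for browser, n in crash_counts.most_common():
    print(f"{browser}: {n}")
```

If one browser version dominates the list for a given tag, that is a strong hint about where to start testing.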

Build your own survey and you can do more

As well as using standard third-party survey systems, I've also worked with custom-coded surveys which were built into the site itself.

Given that the data being stored is so simple, this is not a complex thing to do. And it brings one big advantage: the data can be stored in the back end system as part of the customer's order history in the same way as such systems commonly store interactions with the contact centre.

This means that you could use some aspects of the survey information to segment your email lists, for example. And it also means that if the customer later phones or emails with another question the contact centre will be able to see the history of problems that the customer has reported via the survey.

People expect this kind of joined-up experience: as far as they're concerned if they've told you about something via one channel then you should 'remember' it when they contact you again.

Integrate with Google Analytics?

Up to now I have delayed integrating survey data with Google Analytics. But you could most certainly pass some of this information to GA or another web analytics system.

Some of the specific feedback systems, like Kampyle and Kissinsights, offer this kind of integration. But I don't think it's built into survey systems like Surveymonkey or Surveygizmo.

If you're using GA the first thing to make clear is that you must not pass any personal identification information such as the email address. But there would be no problem sending things like the Net Promoter Score and the 'navigation' and 'checkout' scores as custom variables.

I would pass the 'navigation' and 'checkout' scores as session variables, since they are specific to the visit. You could then use this information to examine the paths taken by people who had problems with navigation or the checkout in the hope of spotting flaws.

By the way, you might want to combine the two fields in order to save variable slots.

In the case of the 'promoter' question, I suggest that it should be a 'visitor' variable so that you can identify such people (but only as anonymous returning visitors) as high scoring or low scoring.

It might be very useful to identify sources of very favourable customers as well as those which seem to be the origin of people who are less well-disposed.

The secret: how to get most value out of the comments

The first few times you read through the comments from your customers you'll be in for some surprises. Yes, you'll spot things you already know about, or suspected. But you'll also start to get some real "oh wow" moments too.

So at first just scanning through the comments will seem exciting and a source of great ideas. But the novelty will wear off. You'll start to forget things you've read. And then you'll start to forget to actually log in and read the comments because it starts to seem like any other business chore.

In fact it's much better to resist the temptation to just scan. What you need to do is to treat analysing the survey as a formal business process from the start.

To do this you need to import the comments into some off-line processing system. This could be as simple as a spreadsheet, or it could be a simple database of some kind. We use FileMaker Pro for this.

You need to be able to add a sentiment score for each comment: positive, neutral, or negative.

Then you need a way to add tags or flags to each comment to associate it with a set of themes such as: navigation, search, product information, product request, price, delivery cost, cart, address, delivery options, payment, speed, crash, and so on.

One very important extra tag is 'needs follow up.' This assumes you have been able to store an order reference or the customer's email address to allow a follow up.
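If you build this in code rather than a spreadsheet, the record for each comment could look something like the sketch below. This is only one possible shape, not the FileMaker layout we use; the `Comment` class and its field names are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class Comment:
    """One survey comment plus the analyst's annotations (hypothetical layout)."""
    text: str
    sentiment: str                      # one of SENTIMENTS
    tags: set = field(default_factory=set)
    order_ref: Optional[str] = None     # stored with the survey, if available
    email: Optional[str] = None         # stored with the survey, if available

    def __post_init__(self):
        if self.sentiment not in SENTIMENTS:
            raise ValueError(f"unknown sentiment: {self.sentiment!r}")

    @property
    def can_follow_up(self) -> bool:
        """True if the comment is flagged and we have a way to reach the customer."""
        flagged = "needs follow up" in self.tags
        return flagged and bool(self.order_ref or self.email)


comment = Comment(
    text="I could not edit my delivery address.",
    sentiment="negative",
    tags={"address", "needs follow up"},
    order_ref="A1001",  # made-up order reference
)
print(comment.can_follow_up)
```

The point of the `can_follow_up` check is simply that the 'needs follow up' tag is only useful when an order reference or email address was captured.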

When we start working on a new site survey we run through a couple of weeks' worth of comments to decide what the category tags should be. The major themes quickly start to emerge and less than a thousand comments should be plenty. Then we configure our system to make the tag-entry process as simple as possible.

Routine is the key

Analysing survey comments needs to be a regular weekly or monthly process. Ideally the same person should do the tagging. There are two key benefits:

- The allocation of tags will be more consistent.

- The person reading all the comments will remember things and make connections between different comments.

Both those are important because they improve two things: reporting on trends and making intelligent connections between a series of comments.

Reporting on trends

Allocating the comments to themes will always be fuzzy. But the more consistent you are the better. You can then calculate the percentage of comments relating to each theme for each week, or other period.

You can then trend these themes over time and use this as a way of spotting when something is going wrong, or if changes you have made are resulting in an improvement.
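The percentage calculation is simple enough to do in a spreadsheet, but as a sketch in Python it might look like this. The week labels, sample tags and the `theme_share_by_week` function are invented for the example.

```python
from collections import Counter, defaultdict

# Hypothetical tagged comments: (week label, set of theme tags).
tagged = [
    ("W01", {"navigation"}),
    ("W01", {"delivery cost", "price"}),
    ("W01", {"navigation", "search"}),
    ("W01", {"payment"}),
    ("W02", {"delivery cost"}),
    ("W02", {"delivery cost", "cart"}),
]


def theme_share_by_week(rows):
    """Return {week: {theme: percentage of that week's comments with the theme}}."""
    totals = Counter()
    theme_counts = defaultdict(Counter)
    for week, tags in rows:
        totals[week] += 1
        for tag in tags:
            theme_counts[week][tag] += 1
    return {
        week: {theme: 100.0 * n / totals[week] for theme, n in counts.items()}
        for week, counts in theme_counts.items()
    }


shares = theme_share_by_week(tagged)
for week in sorted(shares):
    print(week, sorted(shares[week].items()))
```

Because one comment can carry several tags, the percentages for a week can add up to more than 100. That is fine for trending, as long as you calculate them the same way every period.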

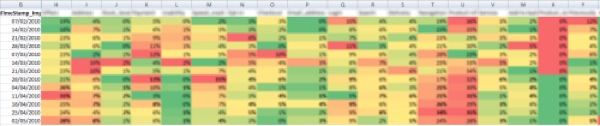

Excel conditional formatting is great for this. We tend to produce a heatmap which shows the areas which are heating up with negative comments:

We also produce an 'alarm' view which highlights themes where the negative comments share is more than one standard deviation above normal:
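The rule behind that alarm can be sketched in a few lines of Python. I am assuming here that 'normal' means the average of the earlier weeks and that the sample standard deviation is used; the figures and the `alarms` function are made up for illustration.

```python
from statistics import mean, stdev

# Hypothetical weekly share (%) of negative comments per theme.
# The last value in each list is the latest week.
history = {
    "delivery cost": [10.0, 12.0, 11.0, 9.0, 10.0, 19.0],
    "navigation": [20.0, 22.0, 18.0, 21.0, 19.0, 20.0],
}


def alarms(history, threshold_sd=1.0):
    """Return themes whose latest share exceeds baseline mean + threshold_sd * SD."""
    flagged = []
    for theme, series in history.items():
        *baseline, latest = series
        if len(baseline) < 2:
            continue  # not enough earlier weeks to estimate a spread
        limit = mean(baseline) + threshold_sd * stdev(baseline)
        if latest > limit:
            flagged.append(theme)
    return flagged


print(alarms(history))
```

With these sample figures only 'delivery cost' is flagged: its latest week sits well above its earlier range, while 'navigation' is at its usual level.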

Making connections

If one person specialises in reading and tagging all the comments each week they will start to build up an understanding of the language people use and the type of things which are being mentioned.

This kind of human instinct for picking up when different people may be talking about the same thing but just using vague language can be really valuable.

Combining consistent analysis using standard procedures with the human insight which comes from a real person working with these comments each week produces great insights.

Again and again there's a 'Eureka!' moment when the person doing the tagging finally sees a comment which reminds them of a string of vague references stretching back over weeks and makes the connection.

Return on investment

As an example of the kind of value you can get out of this process, I'll give you just one case where that human instinct to connect a series of comments paid off. There had been a steady flow of comments referring to an 'editing' aspect of a checkout not working.

- People said they could not edit something once they had entered it.

- It was impossible to replicate. The overlay opened perfectly every time.

- But the comments kept coming.

- The person doing the tagging believed it was happening. That many people can't all imagine the same thing.

- But we could never nail it.

And then along came a weird and convoluted comment which mentioned in passing that the customer had been so frustrated by the problem that they had decided not to buy most of the things they had chosen.

They were so angry that they cut their order down to one item. And then they were even more angry because now the site worked. They ranted that the site seemed to only sell one item at a time.

That was the clue. The attempts to replicate the problem had not involved carts containing a lot of separate items. When the cart screen extended a long way below the fold there was a problem.

This insight was combined with comments about more obvious problems on that page to contribute to a re-design which reduced the abandon rate from that single stage by 30%.

It's the process which brings the pay-off

The example above required two steps for that big improvement:

- There had to be a channel for the customers to provide the comment.

- A highly motivated person had to study the comments in a methodical way and use experience to make the analysis.

The second step is just as important as the first. The final convoluted comment which triggered the change would have been passed over with a shrug by someone just scanning through on a random basis. It's not enough to just provide the feedback channel.

But the real lesson from all of this is the old one: "listen to your customers." With the emphasis on the listening, not on the technology.
