Your adverts and their messaging are integral to your PPC success. Like the campaign itself, they need constant optimisation, revision and testing.

Planning is probably the most important part of ad text testing. Without a solid plan you are simply going to stick a load of messages out there and see what comes back.

This can lead to unfair testing practices and ultimately, worse results.

Find out what you should be considering when it comes to ad text testing...

How to know which messaging to use?

Start by identifying the best features, benefits and USPs. These should always follow the advice of the client, preferably someone on their sales team.

No matter how integrated you get within a company whilst managing their PPC, they are ultimately going to be the experts at selling the service. Write some solid calls-to-action and think about any other messaging you might want to include.

Understand the potential customer and the tone that you should use. Can you use a light-hearted, humorous message or should it be strictly business?

Remember, you don't need to test everything from day one. Think about what is a suitable test at this stage. You will need additional messages to come back to in the future so make sure not to exhaust your testing strategy just yet.

How to test messaging across ad groups

Now that you have your messaging laid out on a plate, think about how this can be tested across multiple ad groups. Let me start by advising on exactly what you shouldn't do...

Imagine a small section of an AdWords account: you have two ad groups and you want to test two different messages. One of your messages is focused on 'Great Deals', the other focuses on 'Luxury'.

This seems like a sensible test: you are looking to see whether price or quality is more important to your customers.

However, now consider the fact that Ad Group one contains keywords related to 'Quality' and Ad Group two contains keywords focused on 'Cheap'. This suddenly makes the test wickedly unfair.

The 'Great Deals' ads are bound to do better in the 'Cheap' group, likewise the 'Luxury' ads will perform better in the 'Quality' group. The outcome of the test is therefore skewed.

If you aggregate the results of both ad groups, the ultimate winner may well be decided simply by whichever ad group has more volume.

The only way to properly analyse these results would be by treating each ad group individually.
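To make the volume problem concrete, here is a minimal Python sketch. The figures are invented purely for illustration, and the helper names (`ctr_by_message`, `winner_per_ad_group`) are my own rather than anything from AdWords. It compares the aggregated CTR per message with the winner inside each ad group:

```python
from collections import defaultdict

# Hypothetical figures, invented purely for illustration:
# (ad_group, message, impressions, clicks)
rows = [
    ("Quality", "Luxury",      1000,  80),
    ("Quality", "Great Deals", 1000,  40),
    ("Cheap",   "Luxury",      5000, 150),
    ("Cheap",   "Great Deals", 5000, 400),
]

def ctr(clicks, impressions):
    """Click-through rate, guarding against zero impressions."""
    return clicks / impressions if impressions else 0.0

def ctr_by_message(rows):
    """CTR per message with every ad group lumped together."""
    totals = defaultdict(lambda: [0, 0])  # message -> [impressions, clicks]
    for _, message, impressions, clicks in rows:
        totals[message][0] += impressions
        totals[message][1] += clicks
    return {m: ctr(c, i) for m, (i, c) in totals.items()}

def winner_per_ad_group(rows):
    """Best-performing message (by CTR) inside each ad group."""
    best = {}  # ad_group -> (message, ctr)
    for group, message, impressions, clicks in rows:
        rate = ctr(clicks, impressions)
        if group not in best or rate > best[group][1]:
            best[group] = (message, rate)
    return {g: m for g, (m, _) in best.items()}

aggregated = ctr_by_message(rows)
per_group = winner_per_ad_group(rows)

print("Aggregated CTR:", aggregated)
print("Winner per ad group:", per_group)
```

With these made-up numbers, 'Great Deals' wins the aggregated view only because the 'Cheap' ad group carries five times the impressions, while 'Luxury' still wins inside the 'Quality' group.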

The point here is that user intent needs to be very similar across all ad groups in which you test the same set of ads. A separate test should be run for each distinct audience profile.

Which metrics should I measure?

This is something else that is regularly overlooked. It is easy to focus on CTR as always being the most important metric, but this is not always the case.

The correct answer is that it completely depends on the nature of your account. If you are currently over-performing on conversions and want to drive more volume, then CTR could be the best metric to judge by.

If your CPA is struggling, then conversion rate may be the better choice. However, don't forget to look at other typical KPIs such as time on site, average order value or bounce rate.
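As a rough illustration of how the choice of metric changes the winner, here is a small Python sketch. The `AdStats` class, the `pick_winner` helper and all of the figures are hypothetical, made up for this example only:

```python
from dataclasses import dataclass

@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    cost: float

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks per impression
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def conversion_rate(self) -> float:
        # Conversions per click
        return self.conversions / self.clicks if self.clicks else 0.0

    @property
    def cpa(self) -> float:
        # Cost per acquisition; infinite if nothing has converted yet
        return self.cost / self.conversions if self.conversions else float("inf")

def pick_winner(ads, metric, higher_is_better=True):
    """Return the name of the ad that scores best on the chosen metric."""
    chooser = max if higher_is_better else min
    return chooser(ads, key=lambda name: getattr(ads[name], metric))

# Hypothetical figures, invented purely for illustration
ads = {
    "Ad A": AdStats(impressions=10000, clicks=500, conversions=10, cost=250.0),
    "Ad B": AdStats(impressions=10000, clicks=300, conversions=15, cost=150.0),
}

print("Best CTR:", pick_winner(ads, "ctr"))
print("Best conversion rate:", pick_winner(ads, "conversion_rate"))
print("Best CPA:", pick_winner(ads, "cpa", higher_is_better=False))
```

In this invented data set, Ad A attracts more clicks but Ad B converts better and more cheaply, so the "winning" ad depends entirely on which metric the account needs most.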

How to test with constant sales and promotions

One apparent spanner-in-the-works with ad testing can be when a client decides to have a sale. You suddenly have to ditch all your old ads and introduce new ones for the purposes of promoting their offers.

Your ads may require a revamp, but your testing policy should not. You can still learn what works in this sale period and use that to influence the following sale.

Even if a client has regular intermittent sale and non-sale periods you can still make some great findings from these to influence your ads.

How Quality Scores impact a test

Something to be aware of is that new ads will often suffer from a slightly lower quality score than existing ones.

This makes sense when you think of it from Google's point of view: a new ad may be poor, so Google doesn't really want to give it any major exposure until it has some proof that the ad is going to work, e.g. it has established a decent CTR.

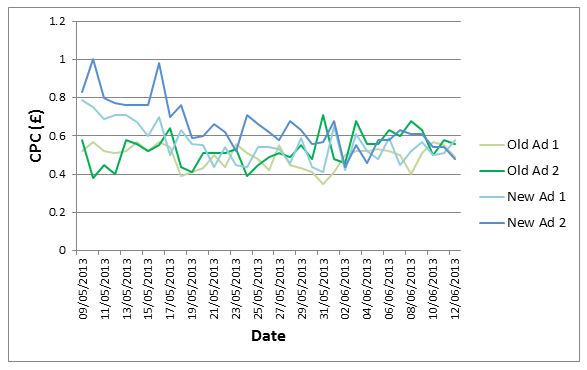

Therefore your quality scores may be below average for a few days before settling down in-line with the rest of the account. Here is an example of that happening:

The new ads were introduced at the start of this graph, and we can see it takes approximately 10 days before they start to perform similarly to the existing ones.

With a lower quality score, your ads are likely to show in slightly worse positions and may not be eligible to show all of the ad extensions that they would with a better quality score. The main thing to bear in mind is that you should do some analysis similar to the above.

This will allow you to work out when the quality scores in a test have steadied. You should then only analyse the data from this date onwards.
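One way to find that date is sketched below in Python. It is only a sketch under my own assumptions: it uses a daily metric (CTR here) for the new and existing ads, and treats the new ads as "settled" once they stay within 10% of the existing ads for three days running. The function name, the tolerance, the window and the data are all hypothetical, so adjust them to suit your account.

```python
def first_stable_day(new_series, baseline_series, tolerance=0.10, window=3):
    """Return the index of the first day that begins a run of `window`
    consecutive days on which the new ads are within `tolerance`
    (relative difference) of the existing ads. Returns None if no
    such run exists in the data."""
    run = 0
    for day, (new, base) in enumerate(zip(new_series, baseline_series)):
        within = base > 0 and abs(new - base) / base <= tolerance
        run = run + 1 if within else 0
        if run == window:
            return day - window + 1
    return None

# Hypothetical daily CTRs, invented purely for illustration
new_ads = [0.020, 0.022, 0.025, 0.031, 0.028, 0.033,
           0.037, 0.038, 0.039, 0.041, 0.040, 0.042]
existing_ads = [0.040] * len(new_ads)

start = first_stable_day(new_ads, existing_ads)
print("Analyse the test from day index:", start)
```

Anything before the returned day would be left out of the test analysis. The check only looks for the first settled run, so it is still worth eyeballing the graph in case the numbers drift apart again later.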

So now I have perfect ads, right?

Hopefully nobody has ever actually asked that, but I just want to make the point that you will never have a perfect ad. There is always something that can beat it.

And even if it is (through some miraculous occurrence) the perfect ad, it won't be for long. As other advertisers change their ads, Google changes its natural listings, and the layouts of the SERPs are altered, users will respond in different ways.

I find the best way to continuously test ads is to start off with wildly diverse ads and work out which of these perform best. Once you are happy that you have tried a wide variety of different concepts for the ads, it is then time to start refining them.

Try changing your sentences and the order of words, tweak some of the wording, alter the capitalisation and experiment with punctuation. There are always new things to test!
